John von Neumann may well have said 'With four parameters I can fit an elephant, and with five I can make him wiggle his trunk', but the quote itself comes from Freeman Dyson quoting Enrico Fermi quoting John von Neumann (Nature v427 p297). Fermi was gently steering Dyson away from Dyson's pseudoscalar meson theory, which had introduced arbitrary parameters in order to make Dyson's results fit Fermi's measurements. The elephant quote was about non-physical model parameters. It was never about physics-based models, although my physics teachers always told me it was.

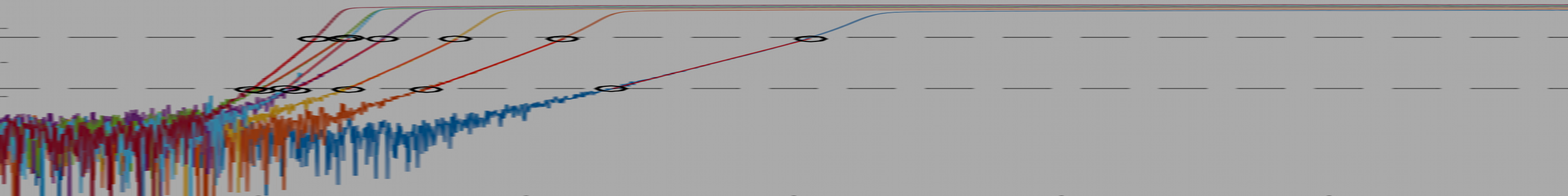

My group's research optimally combines (i) physics-based models, (ii) physics-agnostic models, and (iii) data by quantifying their information content, addressing the question of how physical scientists can work most effectively in an age of abundant data. The key insight is that, if you want to extract information from data, you have to start by asserting something about what the data contains.

Gregory Bateson, the anthropologist and philosopher, said 'no organism can afford to be conscious of matters with which it could deal at unconscious levels.'

Humans have evolved to be extraordinarily good at extracting information from poor data, particularly when it is visual or aural. Think how quickly we learn to understand speech, drive cars, and identify visual patterns, and how difficult it has proven for computers to emulate this.

How much data do the LEGO bricks below contain? Not much. But with the strong prior knowledge encoded within your brain, I bet you know exactly what they show. Defocus your eyes and hover over or click on the image. Can you read the text?

If your brain works like mine, it projected your prior memory of a dollar bill onto the image above and assessed how well the data matched that prior. My research group does the same thing, but with physical laws rather than human memory.

We use the fundamental and rigorous framework of probability theory, starting from a user-defined assertion about what the data contains, usually encoded within partial differential equations. This is ideal for the physical sciences because we know that the data was generated by systems obeying physical constraints, such as conservation laws, with parameters that we already know approximately, such as fluid viscosity.
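As a minimal sketch of this idea, consider a toy problem of our own choosing (not one from the group's work): a parallel shear flow obeys the diffusion equation $u_t = \nu u_{yy}$, so a single sinusoidal mode decays as $e^{-\nu k^2 t}$, and noisy measurements of its amplitude carry information about the viscosity $\nu$. The code encodes the approximate prior knowledge of $\nu$ as a Gaussian, combines it with a Gaussian likelihood for synthetic data, and evaluates the posterior on a grid. The function `model` and every numerical value (true viscosity, noise level, prior mean and width) are hypothetical.

```python
import numpy as np

# Hypothetical model: amplitude of one mode of u_t = nu * u_yy decays as exp(-nu * k^2 * t).
def model(nu, t, k=1.0):
    return np.exp(-nu * k**2 * t)

rng = np.random.default_rng(0)
t_obs = np.linspace(0.0, 5.0, 20)
nu_true = 0.30        # assumed "true" viscosity used to make synthetic data
sigma_noise = 0.02    # assumed measurement noise (standard deviation)
data = model(nu_true, t_obs) + sigma_noise * rng.standard_normal(t_obs.size)

# Prior: we "already know approximately" the viscosity (assumed Gaussian values).
nu_prior, sigma_prior = 0.25, 0.10

# Unnormalized log posterior = log prior + log likelihood, on a grid of candidate viscosities.
nu_grid = np.linspace(0.01, 0.8, 2000)
log_prior = -0.5 * ((nu_grid - nu_prior) / sigma_prior) ** 2
residuals = data[None, :] - model(nu_grid[:, None], t_obs[None, :])
log_like = -0.5 * np.sum((residuals / sigma_noise) ** 2, axis=1)
log_post = log_prior + log_like

# Normalize numerically (subtract the maximum first to avoid overflow).
post = np.exp(log_post - log_post.max())
dnu = nu_grid[1] - nu_grid[0]
post /= post.sum() * dnu

nu_map = nu_grid[np.argmax(post)]
nu_mean = np.sum(nu_grid * post) * dnu
nu_std = np.sqrt(np.sum((nu_grid - nu_mean) ** 2 * post) * dnu)
print(f"MAP nu = {nu_map:.3f}, posterior mean = {nu_mean:.3f} +/- {nu_std:.3f}")
```

The posterior is much narrower than the prior because the data are informative. A grid like this is only practical for one or two parameters; with many parameters one instead searches for the most probable values with gradient-based optimization, which needs derivatives of the model with respect to every parameter.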

This is not new. It is the standard scientific method written as a Bayesian inference problem. Our main contribution is to accelerate this by orders of magnitude using adjoint methods.
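In symbols, with notation chosen here for illustration rather than taken from the group's papers: let $\mathbf{a}$ be the parameters of a model $\mathcal{H}$, $\mathbf{z}$ the data, and $\mathbf{s}(\mathbf{a})$ the model's prediction of the data. Bayes' theorem updates the prior $p(\mathbf{a}\mid\mathcal{H})$ with the likelihood of the data:

$$
p(\mathbf{a}\mid\mathbf{z},\mathcal{H}) = \frac{p(\mathbf{z}\mid\mathbf{a},\mathcal{H})\,p(\mathbf{a}\mid\mathcal{H})}{p(\mathbf{z}\mid\mathcal{H})}
$$

Assuming Gaussian measurement noise with covariance $\mathbf{C}_e$ and a Gaussian prior with mean $\mathbf{a}_p$ and covariance $\mathbf{C}_a$, the negative log posterior becomes, up to a constant $c$ that does not depend on $\mathbf{a}$, a cost function that balances data mismatch against departure from prior knowledge:

$$
\mathcal{J}(\mathbf{a}) = -\ln p(\mathbf{a}\mid\mathbf{z},\mathcal{H}) + c = \tfrac{1}{2}\big(\mathbf{s}(\mathbf{a})-\mathbf{z}\big)^{\mathsf{T}}\mathbf{C}_e^{-1}\big(\mathbf{s}(\mathbf{a})-\mathbf{z}\big) + \tfrac{1}{2}\big(\mathbf{a}-\mathbf{a}_p\big)^{\mathsf{T}}\mathbf{C}_a^{-1}\big(\mathbf{a}-\mathbf{a}_p\big)
$$

Minimizing $\mathcal{J}$ gives the most probable parameters, and gradient-based minimization requires $\partial\mathcal{J}/\partial\mathbf{a}$, which is the quantity whose cost grows with the number of parameters.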

Bayesian inference has been applied to fluid dynamics before, but rarely with adjoint methods, so it has been too computationally expensive for most applications. Acceleration with adjoint methods is the crucial enabler.

Adjoint methods are to physics-based models what reverse-mode automatic differentiation (backpropagation) is to neural networks: in one calculation, they provide the derivatives of a scalar model output, such as the mismatch with the data, with respect to all parameters, accelerating data assimilation by orders of magnitude. They are, however, technically difficult to implement, which may explain why they have rarely been used for Bayesian inference until now.
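To make the 'one calculation' concrete, here is a minimal sketch on a toy model of our own choosing: the discretized equation $-u'' + p(x)\,u = f$ with one unknown coefficient $p_i$ per grid node, and a cost $J = \tfrac12\sum_i (u_i - z_i)^2$ measuring mismatch with observations $z$. Writing the model as $A(\mathbf{p})\,\mathbf{u} = \mathbf{f}$, one adjoint solve $A^{\mathsf T}\boldsymbol{\lambda} = \partial J/\partial\mathbf{u}$ gives every derivative through $\partial J/\partial p_i = -\boldsymbol{\lambda}^{\mathsf T}(\partial A/\partial p_i)\,\mathbf{u}$, which here reduces to $-\lambda_i u_i$. The helper names `assemble`, `cost` and `adjoint_gradient` and all values are hypothetical; the finite-difference check is included only to show that the two gradients agree.

```python
import numpy as np

def assemble(p, h):
    """Dense matrix A(p) for -u'' + p(x) u = f with u = 0 at both ends (central differences)."""
    n = p.size
    off = -np.ones(n - 1) / h**2
    return np.diag(2.0 / h**2 + p) + np.diag(off, 1) + np.diag(off, -1)

def cost(p, f, z, h):
    """J = 0.5 * sum((u - z)^2), where u solves A(p) u = f."""
    u = np.linalg.solve(assemble(p, h), f)
    return 0.5 * np.sum((u - z) ** 2)

def adjoint_gradient(p, f, z, h):
    """dJ/dp for all n parameters from one forward solve and one adjoint solve."""
    A = assemble(p, h)
    u = np.linalg.solve(A, f)            # forward solve
    lam = np.linalg.solve(A.T, u - z)    # adjoint solve: A^T lam = dJ/du (A happens to be symmetric)
    # dA/dp_i has a single 1 at (i, i), so -lam^T (dA/dp_i) u = -lam_i * u_i
    return -lam * u

n = 50
h = 1.0 / (n + 1)
x = np.linspace(h, 1.0 - h, n)
f = np.ones(n)
p_true = 10.0 + 5.0 * np.sin(2.0 * np.pi * x)   # hypothetical "true" coefficient field
z = np.linalg.solve(assemble(p_true, h), f)     # synthetic, noise-free observations
p = np.full(n, 10.0)                             # current estimate

g_adj = adjoint_gradient(p, f, z, h)

# Check: central finite differences need two forward solves per parameter (2n in total).
eps = 1e-4
E = np.eye(n)
g_fd = np.array([(cost(p + eps * E[i], f, z, h) - cost(p - eps * E[i], f, z, h)) / (2 * eps)
                 for i in range(n)])
rel_err = np.max(np.abs(g_adj - g_fd)) / np.max(np.abs(g_adj))
print(f"relative difference between adjoint and finite-difference gradients: {rel_err:.1e}")
```

The adjoint gradient costs two linear solves whatever the number of parameters, whereas the finite-difference gradient costs 100 solves here and grows linearly with the number of parameters.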

With adjoint methods, data can be assimilated into physics-based models containing several thousand parameters, producing quantitatively accurate, physically interpretable models that require less training data than neural network models and that extrapolate successfully in directions in which the physics holds.
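A minimal sketch of assimilation at this scale, again on a hypothetical toy model rather than one of the group's: 2000 unknown coefficients $p_i$ in $-u'' + p(x)\,u = f$, noisy observations of $u$, and a Gaussian prior on $p$. The adjoint gradient of the Bayesian cost (data mismatch plus prior penalty) is passed to SciPy's L-BFGS-B optimizer, so each iteration needs only one forward and one adjoint solve. The names `solve_model` and `cost_and_gradient` and all numerical values are assumptions made for illustration.

```python
import numpy as np
from scipy.linalg import solve_banded
from scipy.optimize import minimize

n = 2000                                  # number of unknown parameters (one per grid node)
h = 1.0 / (n + 1)
x = np.linspace(h, 1.0 - h, n)
f = np.ones(n)

def solve_model(p, rhs):
    """Solve (-d2/dx2 + p) u = rhs with u = 0 at the ends; the matrix is symmetric tridiagonal."""
    ab = np.zeros((3, n))
    ab[0, 1:] = -1.0 / h**2               # superdiagonal
    ab[1, :] = 2.0 / h**2 + p             # main diagonal
    ab[2, :-1] = -1.0 / h**2              # subdiagonal
    return solve_banded((1, 1), ab, rhs)

# Synthetic data from a hypothetical "true" coefficient field, plus measurement noise.
rng = np.random.default_rng(1)
p_true = 10.0 + 5.0 * np.sin(2.0 * np.pi * x)
sigma_e = 1e-4
z = solve_model(p_true, f) + sigma_e * rng.standard_normal(n)

# Gaussian prior: p is "known approximately" to be about 10 (assumed values).
p_prior, sigma_p = 10.0, 5.0

def cost_and_gradient(p):
    u = solve_model(p, f)                                 # forward solve
    lam = solve_model(p, (u - z) / sigma_e**2)            # adjoint solve (A^T = A here)
    J = (0.5 * np.sum((u - z) ** 2) / sigma_e**2
         + 0.5 * np.sum((p - p_prior) ** 2) / sigma_p**2)
    grad = -lam * u + (p - p_prior) / sigma_p**2          # dJ/dp for all n parameters at once
    return J, grad

p0 = np.full(n, p_prior)
J0, _ = cost_and_gradient(p0)
result = minimize(cost_and_gradient, p0, jac=True, method="L-BFGS-B",
                  bounds=[(0.0, None)] * n, options={"maxiter": 200})
print(f"cost: {J0:.3e} -> {result.fun:.3e} after {result.nit} iterations")
```

The bound keeps each $p_i$ non-negative so that the toy matrix stays positive definite. The prior term is what keeps a problem with as many unknowns as observations well posed.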

Furthermore, if the physics is not known or cannot be modelled, Bayesian inference provides the framework to combine physics-based models with physics-agnostic models such as neural networks.
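One way Bayesian inference can arbitrate between such models is through the marginal likelihood (evidence), the probability of the data given a model with its parameters integrated out. In this minimal sketch, which uses toy models of our own rather than the group's method, a cubic polynomial with a Gaussian prior on its coefficients stands in for a neural network so that the evidence has a closed form. When the data contain only the modelled physics, the evidence should favour the physics-only model, penalizing the unneeded extra parameters (the elephant again); when the data contain a term that the physics-based model lacks, it should favour the combined model. The names `log_evidence`, `physics` and `unmodelled` and all values are hypothetical.

```python
import numpy as np

def log_evidence(Phi, y, prior_std, noise_std):
    """log p(y | model) for y = Phi @ w + e, with w ~ N(0, prior_std^2 I) and e ~ N(0, noise_std^2 I)."""
    C = noise_std**2 * np.eye(y.size) + prior_std**2 * (Phi @ Phi.T)   # covariance of y under the model
    _, logdet = np.linalg.slogdet(C)
    return -0.5 * (y @ np.linalg.solve(C, y) + logdet + y.size * np.log(2.0 * np.pi))

rng = np.random.default_rng(2)
x = np.linspace(0.0, 1.0, 40)
noise_std = 0.01
physics = np.exp(-3.0 * x)                       # hypothetical known physics-based shape
unmodelled = 0.3 * x**2                          # hypothetical physics missing from that model

Phi_phys = physics[:, None]                      # physics-based model: one amplitude parameter
agnostic = np.vander(x, 4, increasing=True)      # columns 1, x, x^2, x^3: stand-in for a neural network
Phi_both = np.hstack([Phi_phys, agnostic])       # physics-based + physics-agnostic

results = {}
for label, truth in [("physics only", 2.0 * physics),
                     ("physics + unmodelled term", 2.0 * physics + unmodelled)]:
    y = truth + noise_std * rng.standard_normal(x.size)
    results[label] = (log_evidence(Phi_phys, y, 3.0, noise_std),
                      log_evidence(Phi_both, y, 3.0, noise_std))
    print(f"{label}: log evidence, physics = {results[label][0]:.1f}, "
          f"physics + agnostic = {results[label][1]:.1f}")
```

The closed form relies on both models being linear in their parameters; with a real neural network the evidence would have to be approximated, for example with a Laplace approximation.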